Controlling Data Center Congestion – Part 2

Intro

A key challenge for data center operators today is delivering high-performance, low-latency services while keeping energy consumption low. Reducing new deployment costs, improving resiliency, lowering operational expenses, and increasing flexibility are critical for meeting SLAs, and optimizing network throughput is essential to all of these objectives. One way to achieve this is through Remote Direct Memory Access (RDMA), which bypasses the CPU and minimizes data transfer times by moving data directly to and from memory. Our earlier blog post gave an overview of priority-based flow control (PFC), explicit congestion notification (ECN), and data center quantized congestion notification (DCQCN). In this post, we’ll examine how RDMA and RDMA over Converged Ethernet version 2 (RoCEv2) leverage DCQCN to achieve ultra-low latency and high throughput and to help relieve network congestion.

DCQCN for RDMA

RDMA is designed for a lossless network, meaning no packets are dropped due to buffer overflow at a switch. PFC is a simple way to control congestion by using pause frames. PFC can prevent packet loss but has many disadvantages, such as unfairness, victim flows, and deadlock. Moreover, PFC alone cannot resolve congestion; you also need flow-level rate control at the sending endpoints. With DCQCN, once the egress queue length exceeds a threshold, the switch marks packets with ECN according to a marking probability. When an ECN-marked packet arrives, the receiver sends a Congestion Notification Packet (CNP) to the sender. The sender adjusts its transmission rate based on whether it has received a CNP. This DCQCN strategy is unique in two ways:

(1) it does not require a destination acknowledgment for releasing the congestion, and

(2) it does not require a truly lossless network.
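The marking and rate-cut steps described above can be sketched in a few lines of Python. This is a simplified illustration that follows the commonly published description of DCQCN, not the behavior of any particular switch or NIC. The threshold names (`k_min_kb`, `k_max_kb`, `p_max`), the gain `g`, and all default values are assumptions chosen for readability.

```python
def ecn_mark_probability(queue_kb, k_min_kb=5.0, k_max_kb=200.0, p_max=0.01):
    """RED-style marking curve at the switch egress queue (assumed thresholds).

    Below k_min nothing is marked, between k_min and k_max the probability
    ramps linearly up to p_max, and above k_max every packet is marked.
    """
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb > k_max_kb:
        return 1.0
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


class Sender:
    """Sending endpoint that cuts its rate whenever a CNP arrives."""

    def __init__(self, line_rate_gbps=100.0, g=1 / 256):
        self.current_rate = line_rate_gbps  # rate currently in use
        self.target_rate = line_rate_gbps   # rate to recover toward later
        self.alpha = 1.0                    # running estimate of congestion
        self.g = g                          # assumed averaging gain

    def on_cnp(self):
        # Remember the pre-cut rate, then reduce in proportion to alpha.
        self.target_rate = self.current_rate
        self.current_rate *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self):
        # No CNP seen for a while: let the congestion estimate decay.
        self.alpha = (1 - self.g) * self.alpha
```

For example, a sender at 100 Gbps with `alpha` at its initial value of 1.0 halves its rate on the first CNP, while later cuts become gentler as `alpha` decays during quiet periods.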

You can tune DCQCN by varying parameters such as the timer that runs while no CNPs arrive, the byte counter that defines how many bytes a flow transfers without a CNP, the additive and hyper increase steps that let flows recover faster, and the CNP interval. Thus, by combining PFC, ECN, and DCQCN with parameters optimized for each application and storage use case, you can provide a near-lossless network for RDMA and RoCEv2.
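To make the role of these parameters more concrete, here is a minimal sketch of how a sender might recover its rate after a cut. It assumes the staged recovery usually described for DCQCN (fast recovery, then additive increase, then hyper increase); the class name, field names, and default values are hypothetical and are not taken from any product configuration.

```python
from dataclasses import dataclass


@dataclass
class RateRecovery:
    current_rate: float            # Gbps, rate in use after the last cut
    target_rate: float             # Gbps, rate before the last cut
    line_rate: float = 100.0       # Gbps, upper bound (assumed)
    ai_step: float = 0.04          # additive increase step in Gbps (assumed)
    hai_step: float = 0.4          # hyper increase step in Gbps (assumed)
    fast_recovery_rounds: int = 5  # rounds before increase steps apply (assumed)
    timer_events: int = 0          # timer expirations since the last CNP
    byte_events: int = 0           # byte-counter expirations since the last CNP

    def on_cnp(self):
        # A new CNP restarts recovery from the beginning.
        self.timer_events = 0
        self.byte_events = 0

    def on_timer_expired(self):
        self.timer_events += 1
        self._increase()

    def on_byte_counter_expired(self):
        self.byte_events += 1
        self._increase()

    def _increase(self):
        hi = max(self.timer_events, self.byte_events)
        lo = min(self.timer_events, self.byte_events)
        f = self.fast_recovery_rounds
        if lo > f:
            # Hyper increase: both counters are past the threshold.
            self.target_rate += (lo - f) * self.hai_step
        elif hi > f:
            # Additive increase: only one counter is past the threshold.
            self.target_rate += self.ai_step
        # Otherwise fast recovery: the target stays where it is.
        self.target_rate = min(self.target_rate, self.line_rate)
        # In every stage, move halfway toward the target.
        self.current_rate = (self.current_rate + self.target_rate) / 2
```

A shorter timer or smaller byte counter makes recovery more aggressive, while larger increase steps raise the target faster once fast recovery ends. Which combination works best depends on the application and storage workload, which is why these values are worth testing rather than guessing.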

Remote Direct Memory Access (RDMA)

RDMA enables data transfer directly between the memory of one device and the memory of another. In contrast, in conventional TCP/IP networks, data transfer relies on the CPU to copy data from memory to the network interface and vice versa. RDMA bypasses the CPU and minimizes data transfer times by moving data directly to and from memory. RDMA also enables network devices to use their system memory as a communication channel, which offers several advantages, including:

• Simplifying network topology since data transfer does not need to follow the path of the data center network.

• Enabling data transfer at tens of gigabytes per second with the memory being an extremely high-bandwidth channel.

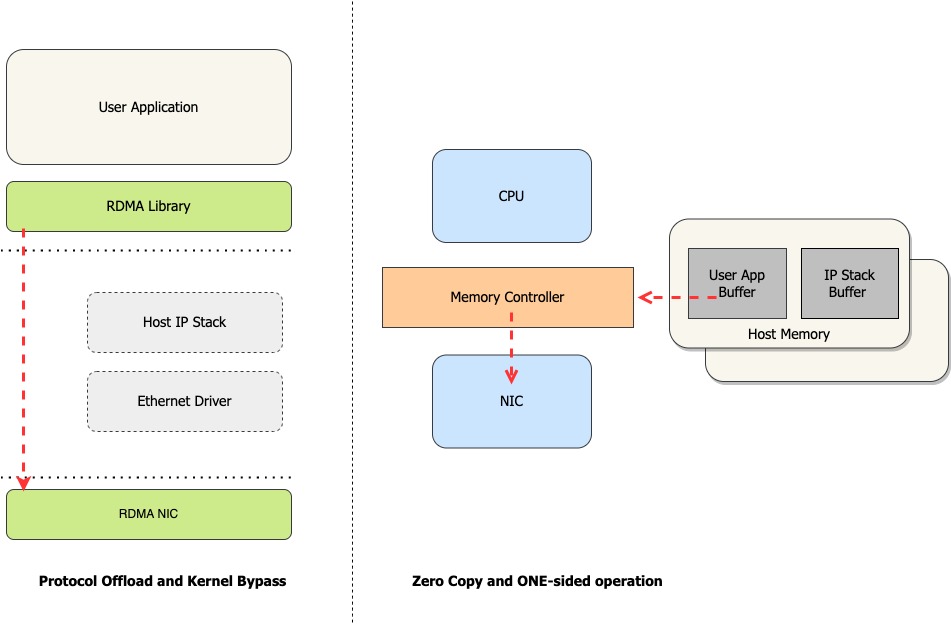

• Transferring data in only a few microseconds because of the memory’s low latency. The diagrams below highlight some of the standout features of RDMA, like kernel bypass, zero-copy, protocol offload, and one-sided operations.

Kernel Bypass: Provides direct access to I/O services from user space, bypassing the kernel

Protocol Offload: CPU resources are freed from network protocol processing such as segmentation, reassembly, delivery guarantees, etc.

Zero-copy: Eliminates multiple copies of I/O data by providing direct access to userspace memory buffers for I/O devices

One-sided Operation: Asynchronous processing frees the receiving end from spending CPU cycles on processing and scheduling during Read/Write operations

RoCEv2

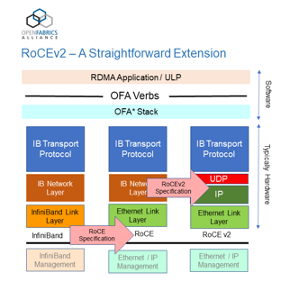

The RoCE protocol was introduced as a standard for RDMA over Ethernet, which was updated to become RoCEv2. RoCEv2 provides a reliable, high-performance, low-latency, low-overhead, and low-cost solution for transporting data between servers and servers and storage. RoCEv2 is also routable and benefits from all the advantages in scalability that come with it. It also offers important security advantages, mainly due to its lower latency. The image below illustrates the RoCEv2 framework.

Source: OpenFabrics Alliance

RoCEv2 keeps InfiniBand’s transport layer but replaces the InfiniBand link and network layers with Ethernet and UDP/IP. Combined with congestion control mechanisms including PFC, ECN, and DCQCN, the Ethernet fabric behaves as a near-lossless network while retaining the inherent benefits of RDMA. RDMA and RoCEv2 are now extensively used in software-defined data centers, software-defined networks, software-defined storage, private clouds, the Internet of Things, databases, analytics, and machine learning solutions.
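As a rough illustration of how a RoCEv2 packet is layered, the sketch below lists the encapsulation order and packs a 12-byte InfiniBand Base Transport Header (BTH), which travels inside a UDP datagram addressed to port 4791. The helper names `pack_bth` and `unpack_bth` are hypothetical, the opcode in the usage note is only an example value, and the field handling is deliberately simplified (flag and reserved bits are left at zero).

```python
import struct

ROCEV2_UDP_PORT = 4791  # UDP destination port registered for RoCEv2

# Outermost to innermost layers of a RoCEv2 packet.
ROCEV2_LAYERS = [
    "Ethernet",
    "IP",
    f"UDP (dst port {ROCEV2_UDP_PORT})",
    "InfiniBand BTH",
    "Payload",
    "ICRC",
]


def pack_bth(opcode, dest_qp, psn, pkey=0xFFFF, ack_req=False):
    """Pack a simplified 12-byte Base Transport Header in network byte order."""
    flags = 0                                  # SE, M, PadCnt, TVer left at zero
    qp_word = dest_qp & 0xFFFFFF               # top byte reserved, 24-bit dest QP
    psn_word = (int(ack_req) << 31) | (psn & 0xFFFFFF)  # ack bit plus 24-bit PSN
    return struct.pack("!BBHII", opcode, flags, pkey, qp_word, psn_word)


def unpack_bth(header):
    """Return (opcode, dest_qp, psn, ack_req) from a packed BTH."""
    opcode, _flags, _pkey, qp_word, psn_word = struct.unpack("!BBHII", header)
    return opcode, qp_word & 0xFFFFFF, psn_word & 0xFFFFFF, bool(psn_word >> 31)
```

Calling `pack_bth(0x04, dest_qp=0x12, psn=1)` yields a 12-byte header that `unpack_bth` can read back. Because everything above the UDP layer is ordinary IP, standard routers can forward the packet without understanding the RDMA transport inside it.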

Keysight’s IxLoad offers a comprehensive solution for testing the RoCEv2 performance of various network equipment, such as individual switches or entire switch fabrics, so that network equipment manufacturers can bring high-quality products to market faster. Data center owners and operators can also validate new architecture designs before rolling them out to production, or run comparative tests of network equipment that supports RoCEv2 environments.

Keysight’s Data Center Storage 100GE Test Load Module is driven by IxLoad, the test application for measuring the quality of experience (QoE) of real-time, business-critical applications with converged multiplay service emulations. The module is a unique platform for validating RoCEv2 implementations and parameter settings of converged data center switches.

Read the datasheet for more details about IxLoad’s capabilities and RoCEv2.

Stay tuned for our next blog, where we’ll share our experience testing Single ASIC vs. Multi-ASIC fabric switches in a lossy vs. lossless network and how PFC and RDMA are tested in a SONiC-based data center.