Understanding real RoCEv2 performance

By Daniel Munteanu

RoCEv2 is poised for takeoff. Remote Direct Memory Access (RDMA) is a well-known technology that brings major benefits to high-performance computing and storage applications. It enables memory data transfers between applications over a network without involving the host’s CPU: the application communicates directly with an RDMA-enabled network card, bypassing the host's TCP/IP stack. The result is efficient data movement with high throughput, low latency, and low CPU overhead, which in turn reduces power, cooling, and rack space requirements. Initially, however, RDMA was defined to run over InfiniBand network stacks, which hindered its adoption.

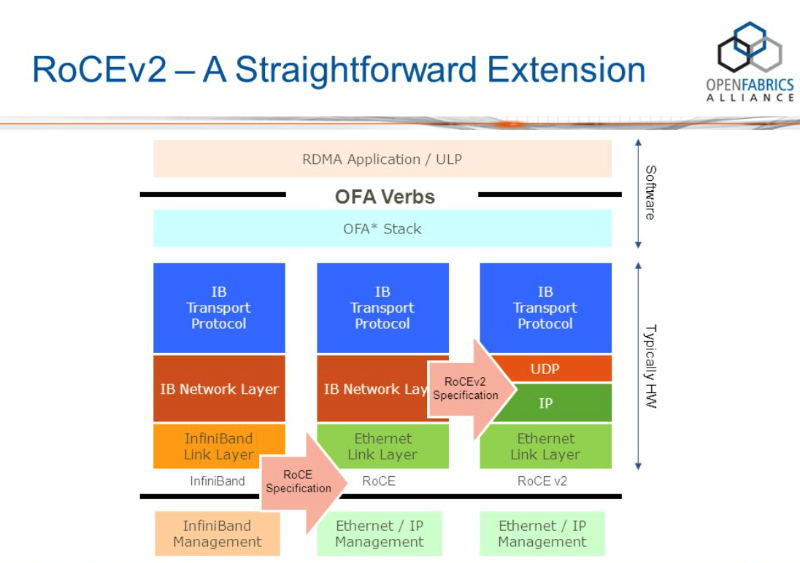

RDMA began to attract much more attention in 2010, when the InfiniBand Trade Association (IBTA) released the first specification for running RDMA over Converged Ethernet (RoCE). The initial specification, however, limited RoCE deployments to a single Layer 2 domain, because RoCE frames had no routing capabilities. In 2014, the IBTA announced RoCEv2, which updates the initial RoCE specification by encapsulating RDMA traffic in UDP/IP packets so that it can be routed across Layer 3 networks, making it far more suitable for hyperscale and enterprise data centers alike.

*Source: https://slideplayer.com/slide/4625194/*

Since then, more and more organizations have adopted RoCEv2 in their data centers, allowing them to converge a growing range of applications (high-performance computing, storage, and, more recently, machine learning and artificial intelligence workloads) onto ubiquitous Ethernet networks. This has yielded significant cost savings in network build-out and maintenance, and has made it easier to find personnel with the right expertise.

Microsoft Azure, which operates some of the largest data centers in the world, is among the many organizations employing RDMA, and has achieved significant COGS savings through reduced CPU requirements (details here).

It’s time to address the unknowns with realistic, stateful testing

Despite the growing adoption of RDMA, and of RoCEv2 in particular, there is still a gap in understanding how RoCEv2 actually performs in real deployments, which configurations are optimal, and what the underlying infrastructure requires.

For most RoCEv2 deployments, switch fabric performance plays a critical role in the overall solution’s capabilities, so it is paramount to measure relevant network KPIs such as total throughput, packet loss, latency, traffic fairness, convergence time, and network stability. These KPIs need to be measured under different conditions (various degrees of congestion, or network events such as links going down or flapping) and under different network configurations (buffer size, ECN settings, traffic class segregation parameters, etc.). The test traffic should be as close as possible to production traffic (packet size, message length, number of queue pairs (Q-pairs), etc.) and, where relevant, should combine RoCEv2 with other TCP-based traffic between multiple senders and receivers.
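To make a few of these KPIs concrete, here is a minimal sketch that computes per-Q-pair throughput, aggregate packet loss, and traffic fairness from counters collected over one measurement interval. The `QpCounters` type and its field names are hypothetical, not IxLoad statistics. The article does not say how fairness should be scored; Jain's fairness index is used here as one common choice.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class QpCounters:
    """Hypothetical per-Q-pair counters for one measurement interval."""
    tx_packets: int
    rx_packets: int
    rx_bytes: int


def throughput_gbps(qp: QpCounters, interval_s: float) -> float:
    """Received throughput of one Q-pair in Gbit/s."""
    return qp.rx_bytes * 8 / interval_s / 1e9


def loss_ratio(qps: Sequence[QpCounters]) -> float:
    """Fraction of transmitted packets that never arrived, across all Q-pairs."""
    tx = sum(q.tx_packets for q in qps)
    rx = sum(q.rx_packets for q in qps)
    return (tx - rx) / tx if tx else 0.0


def jain_fairness(rates: Sequence[float]) -> float:
    """Jain's index: 1.0 when all rates are equal, 1/n when one flow gets everything."""
    total = sum(rates)
    sum_sq = sum(r * r for r in rates)
    if not rates or sum_sq == 0:
        raise ValueError("need at least one non-zero rate")
    return total * total / (len(rates) * sum_sq)


if __name__ == "__main__":
    qps = [QpCounters(1000, 1000, 1_250_000_000), QpCounters(1000, 990, 625_000_000)]
    rates = [throughput_gbps(q, interval_s=1.0) for q in qps]
    print(rates, loss_ratio(qps), jain_fairness(rates))
```

With two Q-pairs receiving 10 and 5 Gbit/s, the index is (10 + 5)^2 / (2 * (10^2 + 5^2)) = 0.9. It reaches 1.0 only when every Q-pair gets the same share, which is why it is a convenient single number for comparing fabric configurations.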

Traditionally, RoCEv2 testing was performed with homegrown solutions. This approach has many shortcomings: such setups are expensive to build (they require real servers), difficult to manage (the software on all the servers has to be controlled centrally), limited in scale and performance, and short on relevant statistics, and they make it very difficult to obtain repeatable, quantifiable results.

Another proposed approach is to validate with stateless traffic. This falls short, however, because one of the main benefits of RoCEv2 is congestion control per Q-pair (not just PFC) to keep the fabric near-lossless, and stateless traffic cannot validate per-Q-pair congestion control: a stateless generator does not slow down in response to congestion notifications. Validating it requires stateful RoCEv2 traffic with parameter-level control over explicit congestion notification (ECN) and the DCQCN congestion control algorithm, so that buffering in a data center Ethernet switching fabric can be optimized correctly.
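To illustrate what per-Q-pair congestion control involves, here is a simplified sketch of the DCQCN sender-side (reaction point) logic, together with the RED-style ECN marking curve a switch applies. It follows the commonly described form of the algorithm: each CNP cuts the current rate by a factor of alpha/2 and raises the congestion estimate alpha, alpha decays when no CNP arrives, and the rate then recovers toward a target rate. It is a sketch only. Timers and byte counters are reduced to explicit method calls, the hyper-increase stage is omitted, and the parameter values (`g`, `rate_ai`, `fast_recovery_steps`, and the ECN thresholds in the example) are illustrative assumptions, not IxLoad or NIC defaults.

```python
class DcqcnSender:
    """Simplified DCQCN reaction point for a single Q-pair (rates in Gbit/s)."""

    def __init__(self, line_rate: float, g: float = 1 / 256,
                 rate_ai: float = 0.04, fast_recovery_steps: int = 5):
        self.line_rate = line_rate
        self.g = g                    # gain of the alpha estimate (illustrative)
        self.rate_ai = rate_ai        # additive-increase step (illustrative)
        self.fast_recovery_steps = fast_recovery_steps
        self.rc = line_rate           # current sending rate
        self.rt = line_rate           # target rate to recover toward
        self.alpha = 1.0              # congestion estimate; starting at 1.0 is an assumption
        self.stage = 0                # increase events since the last CNP

    def on_cnp(self) -> None:
        """A CNP arrived: remember the old rate as target, then cut the rate."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.stage = 0

    def on_alpha_timer(self) -> None:
        """An alpha-update period passed without any CNP: decay the estimate."""
        self.alpha *= 1 - self.g

    def on_increase_event(self) -> None:
        """The rate-increase timer or byte counter fired."""
        if self.stage >= self.fast_recovery_steps:
            # Additive increase: raise the target, capped at line rate.
            self.rt = min(self.rt + self.rate_ai, self.line_rate)
        # In every stage, close half of the gap to the target rate.
        self.rc = (self.rt + self.rc) / 2
        self.stage += 1


def ecn_mark_probability(queue_kb: float, k_min_kb: float,
                         k_max_kb: float, p_max: float) -> float:
    """RED-style marking at the switch: 0 up to Kmin, linear up to Pmax, 1 above Kmax."""
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb > k_max_kb:
        return 1.0
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


if __name__ == "__main__":
    s = DcqcnSender(line_rate=100.0)
    s.on_cnp()                    # 100 -> 50 Gbit/s
    s.on_cnp()                    # 50 -> 25 Gbit/s, target 50
    for _ in range(5):
        s.on_increase_event()     # fast recovery toward 50
    print(s.rc, s.rt, s.alpha)
    print(ecn_mark_probability(102.5, k_min_kb=5, k_max_kb=200, p_max=0.01))
```

Because each Q-pair's rate depends on the CNPs it receives, the switch's marking thresholds (Kmin, Kmax, Pmax) directly shape throughput, latency, and fairness. A stateless generator never reacts to a CNP, so it cannot show whether those thresholds are tuned well.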

There is a growing need to accurately qualify and quantify the RoCEv2 performance of various data center switch fabrics against the requirements of the applications running on them. A proper test tool for this purpose must have the following characteristics:

- Proper, stateful RoCEv2 implementation with granular control over relevant parameters related to ECN, CNP, DCQCN, etc.

- High-performance, up to line-rate traffic generation of RoCEv2 and other TCP/HTTP workloads

- Repeatable tests with predictable and configurable traffic patterns

- Comprehensive granular statistics to help isolate issues

- Emulation of realistic storage and high-performance computing (HPC) workloads, simulating both bursty and non-bursty RDMA traffic patterns with RDMA Jobs (an RDMA Job is a Read/Write transaction sequence between a controller and worker nodes in an HPC cluster)
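The RDMA Job idea can be pictured with a small traffic-pattern model. The sketch below is entirely hypothetical: `RdmaOp`, `build_job`, and `peak_simultaneous` are illustrative names, not IxLoad configuration, and the job shape (the controller WRITEs a block to each worker, then READs a result block back) is an assumption chosen to show the difference between bursty and non-bursty patterns. Since RDMA READ data flows from the responder back to the requester, unstaggered READs converge on the controller at the same instant (an incast burst), while staggering spreads them out.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class RdmaOp:
    """One hypothetical RDMA operation in a job schedule."""
    start_us: float
    verb: str          # "WRITE" or "READ"
    initiator: str
    target: str
    size_bytes: int


def build_job(workers: Sequence[str], size_bytes: int,
              stagger_us: float = 0.0, phase_gap_us: float = 100.0) -> List[RdmaOp]:
    """Controller WRITEs to every worker, then READs results back.

    stagger_us == 0 gives a bursty job (all workers at once);
    stagger_us > 0 spreads the operations out (non-bursty).
    """
    ops = []
    for i, worker in enumerate(workers):
        t = i * stagger_us
        ops.append(RdmaOp(t, "WRITE", "controller", worker, size_bytes))
        ops.append(RdmaOp(t + phase_gap_us, "READ", "controller", worker, size_bytes))
    return sorted(ops, key=lambda op: (op.start_us, op.verb, op.target))


def peak_simultaneous(ops: Sequence[RdmaOp], verb: str) -> int:
    """Largest number of ops of one verb starting at the same instant (crude burstiness measure)."""
    counts = {}
    for op in ops:
        if op.verb == verb:
            counts[op.start_us] = counts.get(op.start_us, 0) + 1
    return max(counts.values(), default=0)


if __name__ == "__main__":
    workers = [f"worker{i}" for i in range(8)]
    bursty = build_job(workers, 1 << 20)
    spread = build_job(workers, 1 << 20, stagger_us=10.0)
    print(peak_simultaneous(bursty, "READ"), peak_simultaneous(spread, "READ"))
```

In the bursty case all eight READ responses start together toward the controller, which is the kind of incast that exercises switch buffers, ECN marking, and DCQCN; the staggered case keeps at most one READ starting at any instant.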

Keysight’s IxLoad offers a comprehensive solution for testing the RoCEv2 performance of network equipment, from individual switches to entire switch fabrics, so that network equipment manufacturers can bring high-quality products to market faster. Data center owners and operators can also use it to validate new architecture designs before rolling them out to production, or to run comparative tests of network equipment supporting RoCEv2 environments.

For more details on IxLoad’s capabilities related to RoCEv2 please refer to the following datasheet.