Hyperscale data center environments are challenging the status quo of traditional network security testing

“The secret of change is to focus all of your energy not on fighting the old, but on building the new.” – Socrates, in Dan Millman’s Way of the Peaceful Warrior.

Every significant innovation in technology has been accompanied by a massive effort to get it deployed, made accessible, and adopted. While change brings a certain amount of uncertainty and anxiety, it is also the most proven way of making progress. Hyperscale data centers are right now in the midst of a tectonic shift that will change them to the very core. This blog post discusses some of the challenges this shift will bring from a security testing perspective as data centers adapt to these changes.

What’s happening in hyperscale data centers?

Data centers are moving from their usual brick-and-mortar locations to the cloud (figuratively, not literally). More companies are deciding to move a portion of their data centers from a few locations they own to service providers and cloud providers. This comes with some obvious benefits, such as more redundancy, easier scalability, and the expertise of teams whose business is running other companies’ data centers.

Figure: Hyperscale data centers are moving from on-prem to distributed systems

What could go wrong in this move?

As enterprises move their data centers to clouds and hybrid locations, they give up some amount of control in exchange for the advantages this brings. It also means they now depend on various service providers, such as the one that provides WAN services to the cloud or data center hosting company. Additionally, depending on how complex or distributed the data centers are, they are also subject to uncertainties such as the security and data collection rules unique to each country where the data center is hosted, natural disasters, and Internet speed (or the lack of it).

This unpredictability is an issue for enterprises, as they need to ensure that a change that comes with a host of promises doesn’t break something that was already working. However, it is a much bigger cause for concern for the application delivery and security vendors that serve these enterprises. When a vendor’s product sat on a customer site, the vendor had to deal only with the customer’s environment. Now there are several third parties involved in the process, introducing many unknowns and security nightmares. Let’s unpack some of these.

Unique features of hyperscale data centers that demand new ways of testing

- Testing security in a tiered data center environment: Most applications in such distributed data centers are deployed as three-tier applications, with a presentation tier, an application tier, and a database tier. This gives users flexibility and the ability to scale horizontally. In cloud or distributed infrastructure, each tier may be deployed in a different network segment and needs protection and security policies specific to that tier, which results in the need for complex but optimized security rules.

- Uncovering newly added security holes: As data centers become distributed, traffic flows through networks and equipment that do not belong to you, so you cannot place your own security controls on them. In addition, the cloud’s shared responsibility model means that some security functions are handled by other vendors’ devices while you remain responsible for the rest. From a testing perspective, this means you must not only validate that the security controls expected in the customer’s data centers still work as intended, but also ensure that the move introduces no new holes or vulnerabilities.

- Performance issues, and making sure the security rules don’t get the blame: As the destination (in this case, the data center) moves farther from the source, some latency spikes and packet loss are to be expected, and some of it will be caused by the numerous devices in between and their respective policies and rules. This makes it exceptionally difficult for security teams to determine whether a given issue is caused by their security rules or by other factors such as these.

- Cloud dynamism resulting in new security challenges: One of the best features of cloud-scale infrastructure is the ability to expand or contract data centers on demand, enabled by elastic server infrastructure, containerized networks, and autoscaling policies. However, this also creates a unique challenge for security systems, which now have to protect systems that may appear for a short time and then disappear.

- Overall chaos: Distributed systems with many dependencies are a perfect recipe for chaos: components can go down, access can be blocked, natural disasters can occur, and instances can be quarantined or shut down. Chaos testing for security requires replicating such events and ensuring that the security systems are resilient enough to survive and thrive in that chaos.
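To make the tiered-policy point from the first item more concrete, here is a minimal Python sketch of how a test could enumerate every flow between tiers and compare the expected verdict with what was actually observed. The tier names, the assumed policy (each tier may only open connections to the tier directly behind it), and the observed results are all hypothetical illustrations; this is not CyPerf’s API or any vendor’s actual method.

```python
from itertools import product

# Hypothetical tier names for a three-tier application plus the outside world.
TIERS = ["internet", "presentation", "application", "database"]

# Assumed intended policy: each tier may open connections only to the tier directly behind it.
ALLOWED = {
    ("internet", "presentation"),
    ("presentation", "application"),
    ("application", "database"),
}


def expected_verdict(src: str, dst: str) -> str:
    """Return 'allow' or 'block' for a src -> dst flow under the assumed policy."""
    return "allow" if (src, dst) in ALLOWED else "block"


def build_test_matrix(tiers: list) -> dict:
    """Enumerate every ordered pair of distinct tiers with its expected verdict."""
    return {
        (src, dst): expected_verdict(src, dst)
        for src, dst in product(tiers, repeat=2)
        if src != dst
    }


def compare(expected: dict, observed: dict) -> tuple:
    """Split mismatches into security holes (should block, was allowed)
    and over-blocking (should allow, was blocked)."""
    holes, overblocked = [], []
    for pair, verdict in sorted(expected.items()):
        seen = observed.get(pair)
        if verdict == "block" and seen == "allow":
            holes.append(pair)
        elif verdict == "allow" and seen == "block":
            overblocked.append(pair)
    return holes, overblocked


if __name__ == "__main__":
    expected = build_test_matrix(TIERS)
    # Hypothetical observations: everything behaves as intended except a
    # misconfigured rule that lets the presentation tier reach the database.
    observed = dict(expected)
    observed[("presentation", "database")] = "allow"
    holes, overblocked = compare(expected, observed)
    print("holes:", holes)
    print("over-blocked:", overblocked)
```

Running it reports one hole, the presentation tier reaching the database directly, and no over-blocked flows. Checking both directions matters because a rule set that blocks too much can look like a performance problem rather than a policy problem. In a real test, the observed verdicts would come from traffic actually sent across the deployed network segments rather than from a hand-edited dictionary.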

Conclusion

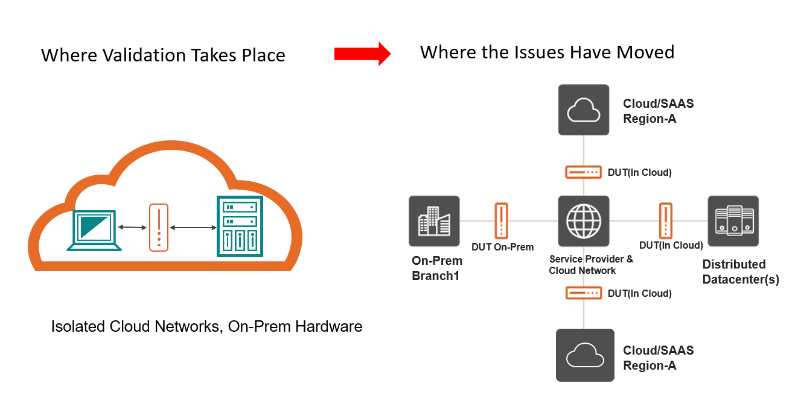

The new and unique problems in distributed hyperscale data centers require a 180-degree shift in testing strategy: vendors need to move from closed, on-premises testing environments to distributed, multi-tiered topologies that accurately represent modern hyperscale data centers, so that they can uncover and fix any security loopholes.

Figure: It’s time to move your validation to the test topologies reflecting real-world use of your products

Keysight’s latest offering, CyPerf, has been designed to facilitate this 180-degree shift in security testing demanded by modern hyperscale networks. CyPerf’s agents are designed to be deployed in hybrid and multi-cloud environments. The agents’ ability to generate high-performance, realistic application-mix traffic combined with malware and exploits enables testing and validation of security tools, policies, and configurations, as well as qualification of their performance impact. You can learn more about CyPerf here.